critical AI literacies for resisting and reclaiming

This summer, we will be running a course for professionals and academics on detecting, overcoming, and surpassing nonsense AI claims.

For more information and to apply, see the Radboud website for this course.

about

On this page are some resources for Critical AI Literacy (CAIL) from my perspective. Also see the project homepage and this press release on our work.

As we say here, CAIL is:

an umbrella for all the prerequisite knowledge required to have an expert-level critical perspective, such as to tell apart nonsense hype from true theoretical computer scientific claims (see our project website). For example, the idea that human-like systems are a sensible or possible goal is the result of circular reasoning and anthropomorphism. Such kinds of realisations are possible only when one is educated on the principles behind AI that stem from the intersection of computer and cognitive science, but cannot be learned if interference from the technology industry is unimpeded. Unarguably, rejection of this nonsense is also possible through other means, but in our context our AI students and colleagues are often already ensnared by uncritical computationalist ideology. We have the expertise to fix that, but not always the institutional support.

CAIL also aims to repatriate university technological infrastructure and to protect our students and ourselves from deskilling, as we explain here:

Within just a few years, AI has turbocharged the spread of bullshit and falsehoods. It is not able to produce actual, qualitative academic work, despite the claims of some in the AI industry. As researchers, as universities, we should be clearer about pushing back against these false claims by the AI industry. We are told that AI is inevitable, that we must adapt or be left behind. But universities are not tech companies. Our role is to foster critical thinking, not to follow industry trends uncritically.

See more in the preprint here, and please cite it:

- Guest, O., Suarez, M., Müller, B., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. https://doi.org/10.5281/zenodo.17065099

deeper dives by me

I have created two longer-form pages on some recurring issues, centred on my perspective and work:

- Turing test — a collection of extracts from my work and from critical and gendered angles on this seemingly omnipresent undercurrent to modern AI thinking.

- We've been here before! — similarly, a collection of extracts and related points that continue to reappear when we contend with the rhetoric the AI industry throws at us.

video talks & interviews

- Millennials Are Killing Capitalism Live! (2025). The Tech Industry's "AI" Play for Education Dollars with Dwayne Monroe. https://www.youtube.com/live/sey7TS4JV68

- Organisers: Leonard Block Santos, Barbara Müller, & Iris van Rooij (2025). Critical AI Literacy: Empowering people to resist hype and harms in the age of AI — Symposium 2025. https://www.youtube.com/watch?v=Fxyg2UMq_no

- Interviewers: Kent Anderson & Joy Moore (2025). Safeguarding Science from AI: An Interview with Olivia Guest and Iris van Rooij. https://www.youtube.com/watch?v=p9w0FiHo1RU

blog posts, news, & opinion pieces

- Klee, M. (2025). AI Is Inventing Academic Papers That Don’t Exist — And They’re Being Cited in Real Journals. Rolling Stone. https://www.rollingstone.com/culture/culture-features/ai-chatbot-journal-research-fake-citations-1235485484/

- Glick, M. (2025). AI Might Not Harm Us in the Way You Think. Nautilus. https://nautil.us/ai-might-not-harm-us-in-the-way-you-think-1248498/

- Singer, N. (2025). Big Tech Makes Cal State Its A.I. Training Ground. New York Times. https://www.nytimes.com/2025/10/26/technology/cal-state-ai-amazon-openai.html

- Walraven, J. (2025). Belgian AI scientists resist the use of AI in academia. Apache. https://apache.be/2025/10/24/belgian-ai-scientists-resist-use-ai-academia

- Pearson, H. (2025). Universities are embracing AI: will students get smarter or stop thinking? Nature. https://www.nature.com/articles/d41586-025-03340-w

- Guest, O. & van Rooij, I. (2025). AI Is Hollowing Out Higher Education. https://www.project-syndicate.org/commentary/ai-will-not-save-higher-education-but-may-destroy-it-by-olivia-guest-and-iris-van-rooij-2025-10

- Crawley, K. (2025). Anti Gen AI Heroes: Dr. Olivia Guest's Dire Warning to Academia. https://stopgenai.com/anti-gen-ai-heroes-dr-olivia-guests-dire-warning-to-academia/

- Guest, O., van Rooij, I., Müller, B., & Suarez, M. (2025). No AI Gods, No AI Masters. https://www.civicsoftechnology.org/blog/no-ai-gods-no-ai-masters

- Merchant, B. (2025). Cognitive scientists and AI researchers make a forceful call to reject "uncritical adoption" of AI in academia. https://www.bloodinthemachine.com/p/cognitive-scientists-and-ai-researchers/

- van Rooij, I. (2025). AI slop and the destruction of knowledge. https://doi.org/10.5281/zenodo.16905560

- Kilgore, W. (2025). Can We Build AI Therapy Chatbots That Help Without Harming People? Forbes. https://www.forbes.com/sites/weskilgore/2025/08/01/can-we-build-ai-therapy-chatbots-that-help-without-harming-people/

- Huckins, G. (2025). How scientists are trying to use AI to unlock the human mind. Understanding the mind is hard. Understanding AI isn't much easier. MIT Technology Review. https://www.technologyreview.com/2025/07/08/1119777/scientists-use-ai-unlock-human-mind/

- Suarez, M., Müller, B., Guest, O., & van Rooij, I. (2025). Critical AI Literacy: Beyond hegemonic perspectives on sustainability. https://doi.org/10.5281/zenodo.15677839

- van Rooij, I. & Guest, O. (2024). Don't believe the hype: AGI is far from inevitable. https://www.ru.nl/en/research/research-news/dont-believe-the-hype-agi-is-far-from-inevitable

research

- Guest, O., Suarez, M., & van Rooij, I. (2025). Towards Critical Artificial Intelligence Literacies. Zenodo. https://doi.org/10.5281/zenodo.17786243

- Guest, O. & Martin, A. E. (2025). A Metatheory of Classical and Modern Connectionism. Psychological Review. https://doi.org/10.1037/rev0000591

- Guest, O. & van Rooij, I. (2025). Critical Artificial Intelligence Literacy for Psychologists. PsyArXiv. https://doi.org/10.31234/osf.io/dkrgj_v1

- Guest, O., Suarez, M., Müller, B., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. https://doi.org/10.5281/zenodo.17065099

- Guest, O. (2025). What Does 'Human-Centred AI' Mean? arXiv. https://doi.org/10.48550/arXiv.2507.19960

- van Rooij, I. & Guest, O. (2025). Combining Psychology with Artificial Intelligence: What could possibly go wrong? PsyArXiv. https://doi.org/10.31234/osf.io/aue4m_v2

- Forbes, S. H. & Guest, O. (2025). To Improve Literacy, Improve Equality in Education, Not Large Language Models. Cognitive Science. https://doi.org/10.1111/cogs.70058

- van Rooij, I., Guest, O., Adolfi, F. G., de Haan, R., Kolokolova, A., & Rich, P. (2024). Reclaiming AI as a theoretical tool for cognitive science. Computational Brain & Behavior. https://doi.org/10.1007/s42113-024-00217-5

- van der Gun, L. & Guest, O. (2024). Artificial Intelligence: Panacea or Non-intentional Dehumanisation? Journal of Human-Technology Relations. https://doi.org/10.59490/jhtr.2024.2.7272

- Guest, O. & Martin, A. E. (2023). On Logical Inference over Brains, Behaviour, and Artificial Neural Networks. Computational Brain & Behavior. https://doi.org/10.1007/s42113-022-00166-x

- Erscoi, L., Kleinherenbrink, A., & Guest, O. (2023). Pygmalion Displacement: When Humanising AI Dehumanises Women. SocArXiv. https://doi.org/10.31235/osf.io/jqxb6

- Birhane, A. & Guest, O. (2021). Towards Decolonising Computational Sciences. Women, Gender & Research. https://doi.org/10.7146/kkf.v29i2.124899

- Whitaker, K. J. & Guest, O. (2020). #bropenscience is broken science. The Psychologist. https://doi.org/10.5281/zenodo.4099011

Pygmalion Displacement: When Humanising AI Dehumanises Women

- Erscoi, L., Kleinherenbrink, A., & Guest, O. (2023). Pygmalion Displacement: When Humanising AI Dehumanises Women. SocArXiv. https://doi.org/10.31235/osf.io/jqxb6

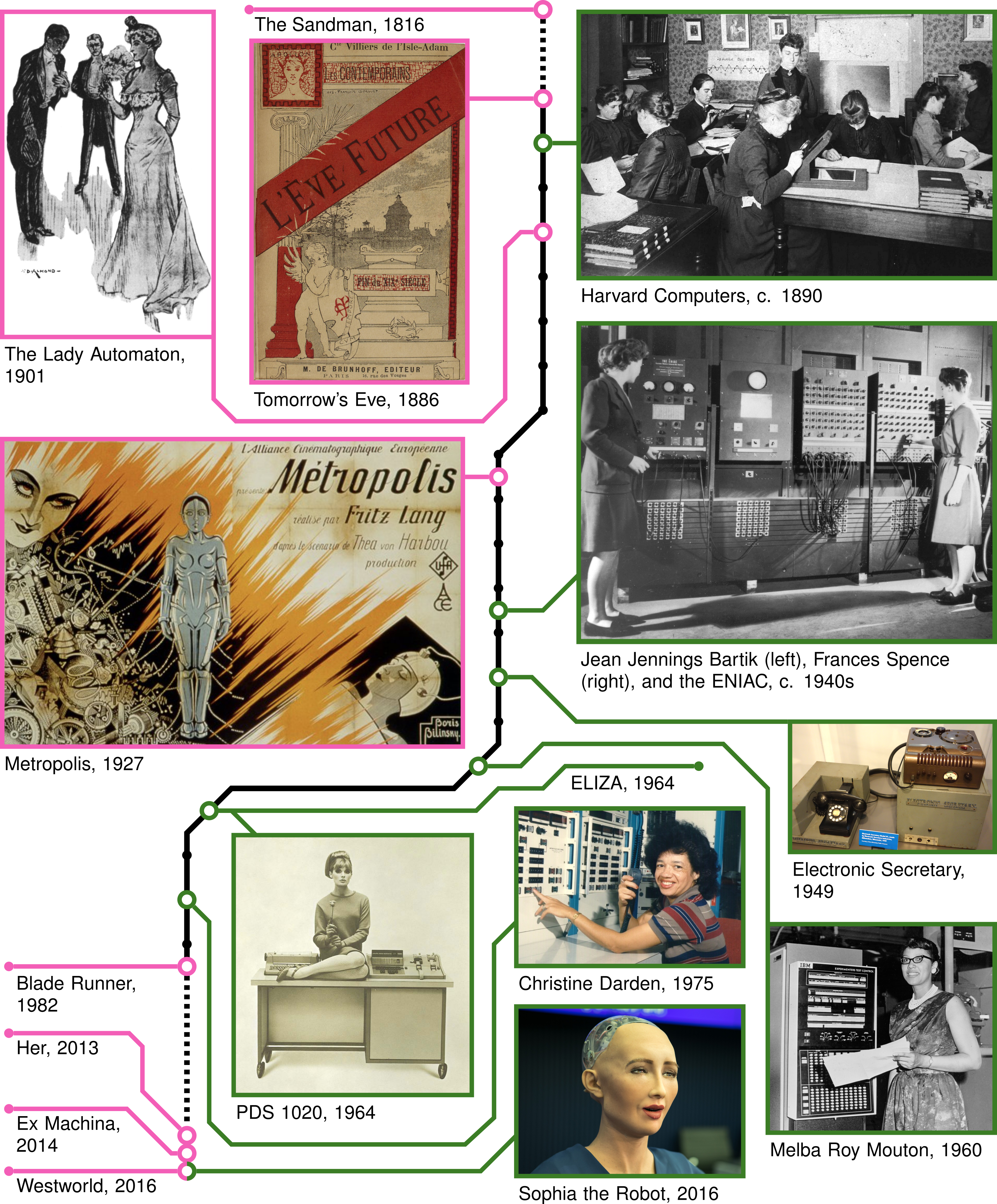

"This figure depicts, in the style of a printed circuit board, a timeline of events (many with related images) that involve Pygmalion displacement in one form or another. On the left [are] fictional instances of women’s and automata’s intertwined identities; from 1901 at the top to today’s film and television series at the bottom. On the right [are] historical individuals or artefacts." (Erscoi et al., 2023, figure 2)

Abstract: We use the myth of Pygmalion as a lens to investigate and frame the relationship between women and artificial intelligence (AI). Pygmalion was a legendary ancient king of Cyprus and sculptor. Having been repulsed by women, he used his skills to create a statue, which was imbued with life by the goddess Aphrodite. This can be seen as one of the primordial AI-like myths, wherein humanity creates intelligent life-like self-images to reproduce or replace ourselves. In addition, the myth prefigures historical and present gendered dynamics within the field of AI and between AI and society at large. Throughout history, the theme of women being replaced by inanimate objects (e.g. automata, algorithms) has been repeated, and continues to repeat in contemporary AI technologies. However, this socially detrimental pattern in technology — what we dub Pygmalion displacement — is often overlooked, whether due to naive excitement about new developments, or due to an unacknowledged sexist history of the field itself. As we demonstrate herein, Pygmalion displacement prefigures heavily, but in an unacknowledged way, in the original Turing test, the imitation game: a central thought experiment, foundational to AI. With women, and the feminine generally, being both dislocated and erased from and by technology, AI is and has been (presented as) created mainly by privileged men, subserving capitalist patriarchal ends. This poses serious dangers to women and other marginalised people. By tracing the historical and ongoing entwinement of femininity (from a patriarchal perspective) and AI, we aim to understand, make visible, and start a dialogue on the ways in which AI harms women.

"The series of questions that comprise the Pygmalion lens. For a given technosocial relationship between AI and people, if one or more of the answers are “Yes”, then we can conclude that (an aspect of) Pygmalion displacement is occurring, which is damaging to women, and the feminised broadly construed. If not, then the lens does not apply, and we remain agnostic as to gendered or other harm within this framework. Our proverbial lens should be taken inter alia to be akin to an optical lens which allows for the eye to see more details than when naked, as depicted in the line drawing below." (Erscoi et al., 2023, table 1)

| # | Pygmalion Lens | Answer |
|---|---|---|
| 1) | Feminised form: Is the AI, by its (default or exclusive) external characteristics, portraying a hegemonically feminine character? | Yes/No |
| 2) | Whitened form: Is the AI, by its (default or exclusive) external characteristics, portraying a character that is inherently white (supremacist), Western, Eurocentric, etc.? | Yes/No |
| 3) | Dislocation from work: Does the AI displace women from a role or occupation, or people in general from a role or occupation that tends to be (coded as) women's work? | Yes/No |
| 4) | Humanisation via feminisation: Are the AI's claims to intelligence, human-likeness or personhood contingent on stereotypical feminine traits or behaviours? | Yes/No |
| 5) | Competition with women: Is the AI pitted (rhetorically or otherwise) against women in ways that favour it, and which are harmful to women? | Yes/No |
| 6) | Diminishment via false equivalence: Does the AI facilitate a rhetoric that deems women as not having full intellectual abilities, or as otherwise less deserving of personhood? | Yes/No |
| 7) | Obfuscation of diversity: Does the AI, through displacement of specific groups of people, “neutralise” (i.e., whiten, masculinise) a role, vocation, or skill? | Yes/No |
| 8) | Robot rights: Do the users and/or creators of the AI grant it (aspects of) legal personhood or human(-like) rights? | Yes/No |
| 9) | Social bonding: Do the users and/or creators of the AI develop interpersonal-like relationships with it? | Yes/No |
| 10) | Psychological service: Does the AI function to subserve and enhance the egos of its creators and/or users? | Yes/No |

Towards Critical Artificial Intelligence Literacies

- Guest, O., Suarez, M., & van Rooij, I. (2025). Towards Critical Artificial Intelligence Literacies. Zenodo. https://doi.org/10.5281/zenodo.17786243

Abstract: Critical Artificial Intelligence Literacies (CAILs) is the collection of ways of thinking about and relating to so-called artificial intelligence (AI) that rejects dominant frames presented by the technology industry, by naive computationalism, and by dehumanising ideologies. Instead, CAILs centre human cognition and uphold the integrity of academic research and education. We present a selection of CAILs across research and education, which we analyse into the following non-orthogonal dimensions: conceptual clarity, critical thinking, decoloniality, respecting expertise, and slow science. Finally, we note how we see the present with and without a wider adoption of CAILs — a fundamental aspect is the assertion that AI cannot be allowed to drive change, even positive change, in education or research. Instead cultivation of and adherence to shared values and goals must guide us. Ultimately, CAILs minimally ask us to contemplate how we as academics can stop AI companies from wielding so much power.

"The important dimensions of CAILs across research and education; clockwise from 12 o’clock: Conceptual Clarity is the idea that terms should refer. Critical Thinking is deep engagement with the relationships between statements about the world. Decoloniality is the process of de-centring and addressing dominant harmful views and practices. Respecting Expertise is the epistemic compact between professionals and society. Slow Science is a disposition towards preferring psychologically, techno-socially, and epistemically healthy practices. The lines between dimensions represent how they are interwoven both directly and indirectly." (Guest et al., 2025, figure 1)

Against the Uncritical Adoption of 'AI' Technologies in Academia

- Guest, O., Suarez, M., Müller, B., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. https://doi.org/10.5281/zenodo.17065099

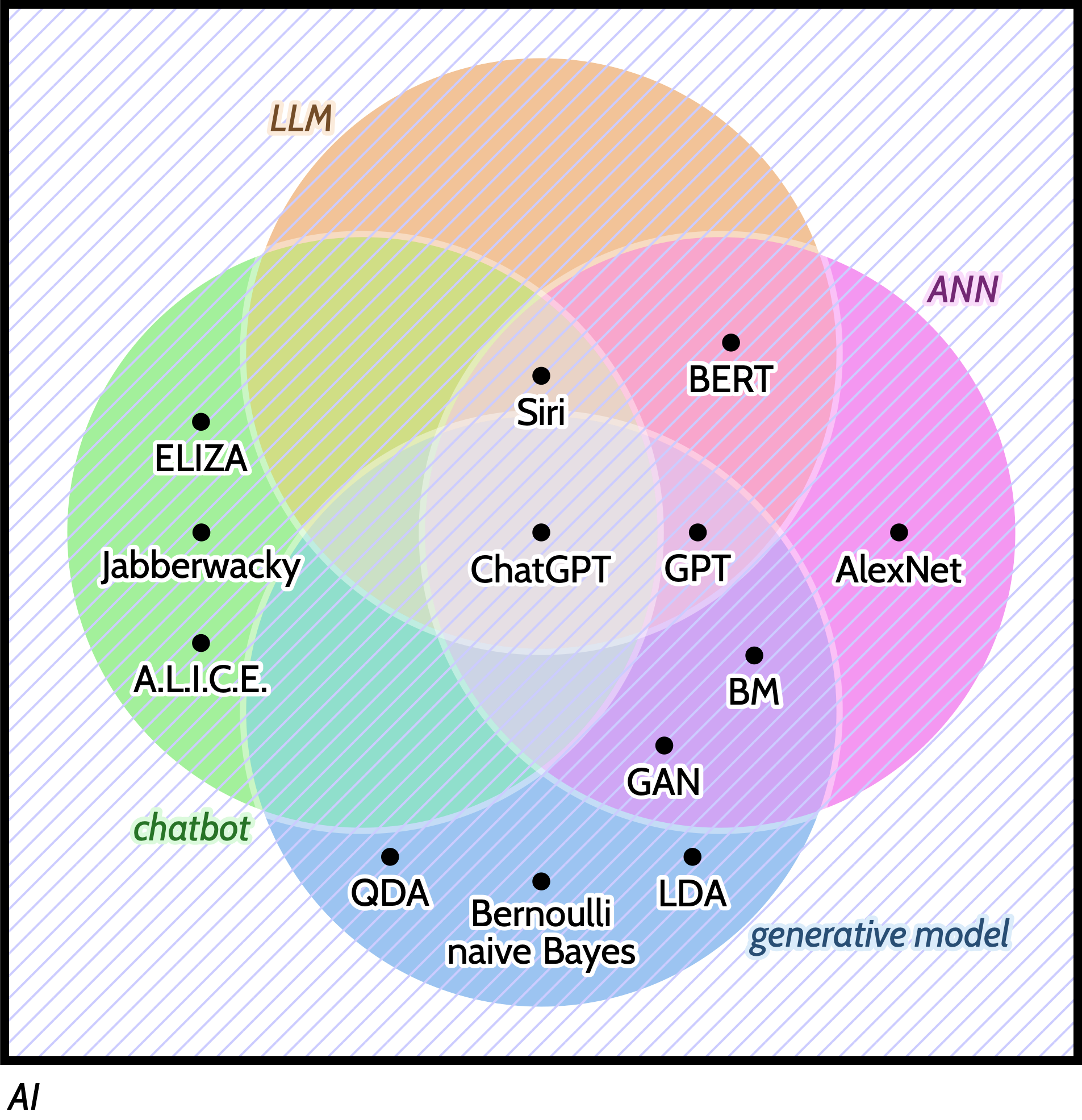

"A cartoon set theoretic view on various terms (see Table 1) used when discussing the superset AI" (Guest et al., 2025, figure 1)

Abstract: Under the banner of progress, products have been uncritically adopted or even imposed on users — in past centuries with tobacco and combustion engines, and in the 21st with social media. For these collective blunders, we now regret our involvement or apathy as scientists, and society struggles to put the genie back in the bottle. Currently, we are similarly entangled with artificial intelligence (AI) technology. For example, software updates are rolled out seamlessly and non-consensually, Microsoft Office is bundled with chatbots, and we, our students, and our employers have had no say, as it is not considered a valid position to reject AI technologies in our teaching and research. This is why in June 2025, we co-authored an Open Letter calling on our employers to reverse and rethink their stance on uncritically adopting AI technologies. In this position piece, we expound on why universities must take their role seriously to a) counter the technology industry's marketing, hype, and harm; and to b) safeguard higher education, critical thinking, expertise, academic freedom, and scientific integrity. We include pointers to relevant work to further inform our colleagues.

"Below some of the typical terminological disarray is untangled. Importantly, none of these terms are orthogonal nor do they exclusively pick out the types of products we may wish to critique or proscribe." (Guest et al., 2025, table 1)

| Term | Description | Resources |
|---|---|---|
| Artificial Intelligence (AI) | The phrase 'artificial intelligence' was coined by McCarthy et al. (1955) in the context of proposing a summer workshop at Dartmouth College in 1956. They assumed significant progress could be made on making machines think like people. In the present, AI has no fixed meaning. It can be anything from a field of study to a piece of software. | Avraamidou (2024), Bender and Hanna (2025), Bloomfield (1987), Boden (2006), Brennan et al. (2025), Crawford (2021), Guest (2025), Hao (2025), McCorduck (2004), McQuillan (2022), Monett (2021), Vallor (2024), and van Rooij, Guest, et al. (2024). |
| Artificial neural network (ANN) | First proposed in McCulloch and Pitts (1943), it is a mathematical model, comprised of interconnected banks of units that perform matrix multiplication and non-linear functions. These statistical models are exposed to data (input-output pairs) that they aim to reproduce. While held to be inspired by the brain, such claims are tenuous or misleading. | Abraham (2002), Bishop (2021), Boden (2006), Dhaliwal et al. (2024), Guest and Martin (2023, 2025a), Hamilton (1998), Stinson (2018, 2020), and Wilson (2016). |
| Chatbot | An engineered system that appears to converse with the user using text or voice. Speech synthesis goes back hundreds of years (Dudley 1939; Gold 1990; Schroeder 1966) and Weizenbaum's (1966) ELIZA is considered the first chatbot (Dillon 2020). Modern versions can contain ANNs in addition to hardcoded rules. | Bates (2025), Dillon (2020), Elder (2022), Erscoi et al. (2023), Schlesinger et al. (2018), Strengers et al. (2024), Turkle (1984), and Turkle et al. (2006). |
| ChatGPT | A proprietary closed-source chatbot created by OpenAI. The for-profit company OpenAI has been steeped in hype from inception. It does not provide source code for most of its models, violating open science principles for academic users. OpenAI reported $5 billion in losses in 2024 (Reuters 2025), and has received $13 billion from Microsoft (Levine 2024). | Andhov (2025), Birhane and Raji (2022), Dupré (2025), Gent (2024), M. T. Hicks et al. (2024), Hill (2025), Jackson (2024), Kapoor et al. (2024), Liesenfeld, Lopez, et al. (2023), Mirowski (2023), Perrigo (2023), Titus (2024), and Widder et al. (2024). |
| Generative model | A specification on the type of statistical distribution modelled; typically contrasted with discriminative model. ANNs can be generative (e.g. Boltzmann machines) or discriminative (e.g. convolutional neural networks used for classifying images). In the context of generative AI or generative pre-trained transformer (GPT), this phrase is used inconsistently. | Efron (1975), Jebara (2004), Mitchell (1997), Ng and Jordan (2001), and Xue and Titterington (2008). |
| Large language model (LLM) | A model that captures some aspect of language, with the term 'large' denoting that the number of parameters exceeds a certain threshold. Modern chatbots are often LLMs, which use ANNs, along with a graphical interface so that users can input so-called text 'prompts.' LLMs can be generative, discriminative, or neither. | Bender, Gebru, et al. (2021), Birhane and McGann (2024), Dentella et al. (2023, 2024), Leivada, Dentella, et al. (2024), Leivada, Günther, et al. (2024), Luitse and Denkena (2021), Shojaee et al. (2025a), Villalobos et al. (2024), and Wang et al. (2024). |
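The table's description of an ANN, interconnected banks of units performing matrix multiplication and non-linear functions, can be made concrete with a minimal sketch. The Python below is illustrative only and is not taken from the paper: the function names and weights are hypothetical, and it shows only the forward computation, leaving out the fitting of weights to input-output pairs. It also shows that a single unit with a logistic non-linearity computes the same function as ordinary logistic regression.

```python
import math


def sigmoid(z):
    # Logistic non-linearity applied to a unit's weighted input
    return 1.0 / (1.0 + math.exp(-z))


def matvec(weights, inputs):
    # Matrix multiplication of a weight matrix (one row per unit) with an input vector
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def layer(weights, biases, inputs):
    # One bank of units: matrix multiplication, add a bias, then the non-linear function
    return [sigmoid(z + b) for z, b in zip(matvec(weights, inputs), biases)]


def forward(layers, inputs):
    # A feedforward ANN is these banks of units applied one after another
    activations = inputs
    for weights, biases in layers:
        activations = layer(weights, biases, activations)
    return activations


def logistic_regression(coefficients, intercept, inputs):
    # Ordinary logistic regression: sigmoid of a weighted sum plus an intercept
    return sigmoid(sum(c * x for c, x in zip(coefficients, inputs)) + intercept)


# Hypothetical weights, chosen by hand purely for illustration (no training involved)
two_layer_net = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden bank: 2 units, 2 inputs each
    ([[2.0, -2.0]], [0.0]),                   # output bank: 1 unit
]
print(forward(two_layer_net, [1.0, 2.0]))

# A one-unit "network" and logistic regression compute the same value
single_unit = [([[0.3, -0.7]], [0.1])]
print(forward(single_unit, [1.0, 2.0])[0])
print(logistic_regression([0.3, -0.7], 0.1, [1.0, 2.0]))
```

In this sketch, training would mean adjusting the weight and bias values until the outputs reproduce the input-output pairs in the data; that fitting step is deliberately omitted.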

Critical Artificial Intelligence Literacy for Psychologists

- Guest, O. & van Rooij, I. (2025). Critical Artificial Intelligence Literacy for Psychologists. PsyArXiv. https://doi.org/10.31234/osf.io/dkrgj_v1

Abstract: Psychologists — from computational modellers to social and personality researchers to cognitive neuroscientists and from experimentalists to methodologists to theoreticians — can fall prey to exaggerated claims about artificial intelligence (AI). In social psychology, as in psychology generally, we see arguments taken at face value for: a) the displacement of experimental participants with opaque AI products; the outsourcing of b) programming, c) writing, and even d) scientific theorising to such models; and the notion that e) human-technology interactions could be on the same footing as human-human (e.g., client-therapist, student-teacher, patient-doctor, friendship, or romantic) relationships. But if our colleagues are, accidentally or otherwise, promoting such ideas in exchange for salary, grants, or citations, how are we as academic psychologists meant to react? Formal models, from statistics and computational methods broadly, have a potential obfuscatory power that is weaponisable, laying serious traps for the uncritical adopters, with even the term 'AI' having murky referents. Herein, we concretise the term AI and counter the five related proposals above — from the clearly insidious to those whose ethical neutrality is skin-deep and whose functionality is a mirage. Ultimately, contemporary AI is research misconduct.

"Core reasoning issues (first column), which we name after the relevant numbered section, are characterised using a plausible quote. In the second column are responses per row; also see the named section for further reading, context, and explanations." (Guest & van Rooij, 2025, table 1)

| Uncritical Statement | Possible Response |
|---|---|
| Lies, Damned Lies, and Statistics: "AI products are outside my expertise but I think it is useful to deploy them." | As a matter of fact, these products are statistical models, akin to logistic regression, which all psychologists, even undergraduate students, are required to be familiar with. Additionally, psychologists are required to know the differences between models used to perform statistical inference and those that are models of cognition, as well as basic open science principles. Therefore, it should come as no shock that assuming the mantle of the non-expert here is inappropriate; in fact, abandoning critical thinking in this way may even be a form of questionable research practice (QRP). |
| Displacement of Participants: "I can use AI instead of participants to perform tasks and generate data." | The provenance of the data used in these models indicates it is not ethically sourced, falling below the standards of our discipline, involving sweatshop labour and no consent for private data used in experiments. The output can contain the original input data directly (i.e. double dipping), but smoothed to remove outliers, conformed to our pre-existing ideas of what it should look like (data fabrication), and irreplicable all round. Psychology is meant to study humans, not patterns at the output of biased statistical models. |
| Outsourcing Programming to Companies: "I can use AI for programming experimental paradigms and statistical analyses." | This is an example of the field’s backsliding from adopting open science and programming skills. No formal specification will be given for code generated from a corporate-owned opaque model. The psychologist now has no reason to learn how to engineer software, and disturbingly might as well switch back to proprietary software like SPSS, which at least has documentation and explicit versions. Code at the output will be plagiarised, making it more time-consuming to check compliance with our needs than if we had written the code ourselves, and violating openness. |
| Ghostwriter in the Machine: "I can use AI for understanding the literature and for scholarly writing." | This practice implicates a swathe of issues akin to automating the paper mill. First, the literature is screened by corporations, which have every reason to control the output of the model to suit their needs or, minimally, to ignore output issues such as sexism. Second, non-existent citations are fabricated, which makes claims worse than baseless because they appear supported by prior work. Third, text is dislocated from the literature, since no provenance can be established, resulting in plagiarism. |
| The End of Scientific Theory: "I can outsource verbal theorising to AI or use it as a formal cognitive model." | This not only adds to the dislocation of work from its evidential and historical basis, but also impedes our theorising about the phenomena and systems under study. In this context, we are interested in human-understandable theory and theory-based models, not statistical models which provide only a representation of the data. Scientific theories and models are only useful if we, the scientists who build and use them, understand them in deep ways and they connect transparently to research questions. AI product use is absconding from scientific duty. |
| Equivocation of Human-Human & Human-AI: "I can study people using chatbots as if they are socially interacting." | Seeing client-therapist, student-teacher, patient-doctor, friendship, or romantic relationships as equivalent to those between people and artifacts is both a form of dehumanisation and a hollowing out of the target of study in social psychology: the relationship between people and other people. It is important to study the relations between humanity and machines and the social interactions mediated through technology, but to place interactions with chatbots in the same category as those between people assumes and risks too much. |

Here are some related academics' and scholars' websites (feel free to contact me to add more):

- Refusing GenAI in Writing Studies: A Quickstart Guide, by Jennifer Sano-Franchini, Megan McIntyre, & Maggie Fernandes.

- AGAINST AI, by Anna Kornbluh, Krista Muratore, & Eric Hayot.

- Computational Impacts: Tech Industry Critique Without Tech-bro-ism, by Dwayne Monroe.

- Stop Gen AI, by Kim Crawley.

- I would be so ashamed to use generative AI, here’s why, by Sarah Winnicki.

- Against Generative AI, by Cate Denial.

Open Letters

Colleagues and I have written and published: Open Letter: Stop the Uncritical Adoption of AI Technologies in Academia.

Please consider adapting the letter for your employer; here are some allies' efforts inspired by our letter:

- An open letter from educators who refuse the call to adopt GenAI in education, by Melanie Dusseau and Miriam Reynoldson.

- Stop AI in Malden Schools, against AI use in schools in Malden (Greater Boston, MA, USA).

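- Sabrina Mittermeier created a German version of our letter: Gegen die unkritische Anwendung und Implementierung sog. Künstlicher Intelligenz (KI) in der deutschen Wissenschaft und im Hochschulalltag (Against the uncritical application and implementation of so-called artificial intelligence (AI) in German academia and everyday university life).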

have you considered not using AI?

I made some posters, in the style of this website, to draw attention to the need for more critical thinking about AI; download them as PDFs here. The QR code points to:

- Guest, O., Suarez, M., Müller, B., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. https://doi.org/10.5281/zenodo.17065099