about

Hi! I am an Assistant Professor of Computational Cognitive Science. I work in the Department of Cognitive Science and Artificial Intelligence in the Donders Centre for Cognition and the School of Artificial Intelligence at Radboud University in the Netherlands.

My research interests comprise (meta)theoretical, critical, and radical perspectives on the neuro-, computational, and cognitive sciences broadly construed. See my list of publications for more.

I am committed to equity, diversity, and inclusion in (open) science, and to promoting access to technical skills training, including the broader decolonisation of cognitive and computational sciences. Relatedly, Christina Bergmann and I maintain a list of underrepresented cognitive computational scientists.

biography

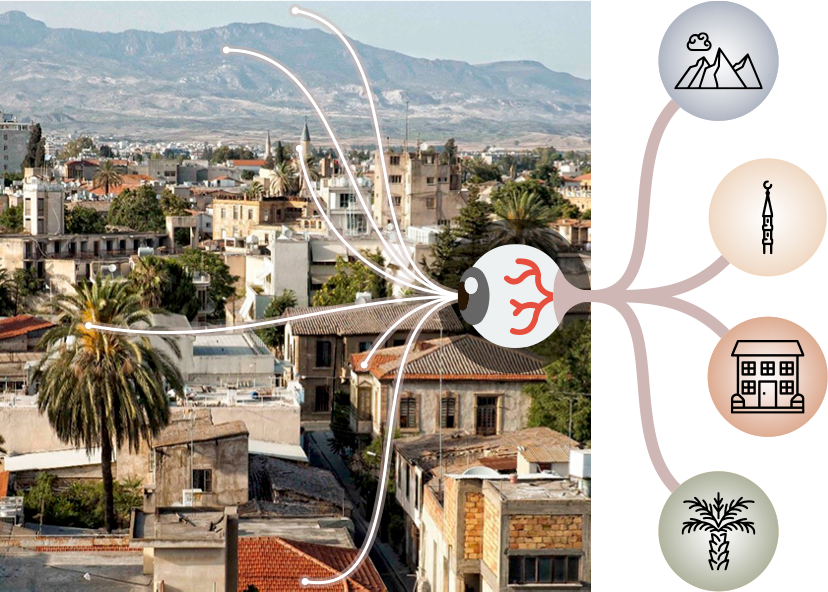

I emigrated from Cyprus to the United Kingdom in 2006 to pursue an undergraduate degree in Computer Science (2009; University of York, UK). After that, I moved on to an MSc in Cognitive and Decision Sciences (2010; University College London, UK). I then undertook a PhD in Psychological Sciences (2014; Birkbeck, UK), specifically on computational models for semantic memory.

Since obtaining my PhD, I have worked in labs at the University of Oxford and University College London, and as an independent scientist at an EU-funded research centre in Cyprus. In 2020, I moved to the Netherlands to work with Andrea E. Martin, before starting as an Assistant Professor at Radboud University in 2021, where I still work.

research interests

If you are interested in working with me, feel free to contact me. Prior to that, it might be worth taking a look at the following themes of research that currently interest me:

- Critical perspectives on AI, its history, its current state, including projects about artificial neural networks, sans hype (e.g. Guest, 2025; Guest & Martin, 2023, 2025b).

- (Meta)theory in neuro-, cognitive, computational, and psychological sciences (e.g. Guest, 2024).

- Human categorisation and models thereof from a perspective of what is lacking in our framing of this cognitive capacity (e.g. Natalia Scharfenberg's PhD project).

- Taking computationalism seriously in divergence from naive perspectives and/or as a reductio (e.g. Guest & Martin, 2025a; Guest et al., 2025; van Rooij et al., 2024).

news

- 27th June 2025: Colleagues and I have written and published: Open Letter: Stop the Uncritical Adoption of AI Technologies in Academia.

- 9th July 2025: The AI as a Science: Course Manual (taught from 2020/21 to 2024/25) is publicly accessible.

Modern Alchemy: Neurocognitive Reverse Engineering

- Guest, O., Scharfenberg, N., & van Rooij, I. (2025). Modern Alchemy: Neurocognitive Reverse Engineering. PhilSci-Archive. https://philsci-archive.pitt.edu/25289

Abstract: The cognitive sciences, especially at the intersections with computer science, artificial intelligence, and neuroscience, propose 'reverse engineering' the mind or brain as a viable methodology. We show three important issues with this stance: 1) Reverse engineering proper is not a single method and follows a different path when uncovering an engineered substance versus a computer. 2) These two forms of reverse engineering are incompatible. We cannot safely reason from attempts to reverse engineer a substance to attempts to reverse engineer a computational system, and vice versa. Such flawed reasoning rears its head, for instance, when neurocognitive scientists reason about what artificial neural networks and brains have in common using correlations or structural similarity. 3) While neither type of reverse engineering can make sense of non-engineered entities, both are applied in incompatible and mix-and-matched ways in cognitive scientists' thinking about computational models of cognition. This results in treating mind as a substance; a methodological manoeuvre that is, in fact, incompatible with computationalism. We formalise how neurocognitive scientists reason (metatheoretical calculus) and show how this leads to serious errors. Finally, we discuss what this means for those who ascribe to computationalism, and those who do not.

| Reverse Engineering Proper | Porcelain, substance | ЕС ЭВМ, computer |
|---|---|---|
| 1) Computation performed | none or identity function | (universal) Turing machine |
| 2) Equivalence sought | structural | functional |
| 3) Multiple realisation | minimally or uniquely realisable | massively or infinitely realisable |
| 4) Search duration | thousands or hundreds of years | single digit number of years |
| 5) Search strategy | industrial espionage, alchemy | industrial espionage, engineering |
| 6) Solution instances | very few, one to three | infinite |
| 7) Verification method | correlation | principles of computation |

"Comparison of the differences between two historical cases of true reverse engineering. [...] In both cases, to both limit the search space (for both it is unbounded) and search time (row 4) complexities, peeking at the solution (row 5) was a necessary part of reverse engineering." (Guest et al., 2025, table 1)

Are Neurocognitive Representations 'Small Cakes'?

- Guest, O. & Martin, A. E. (2025). Are Neurocognitive Representations 'Small Cakes'?. PhilSci-Archive. https://philsci-archive.pitt.edu/24834

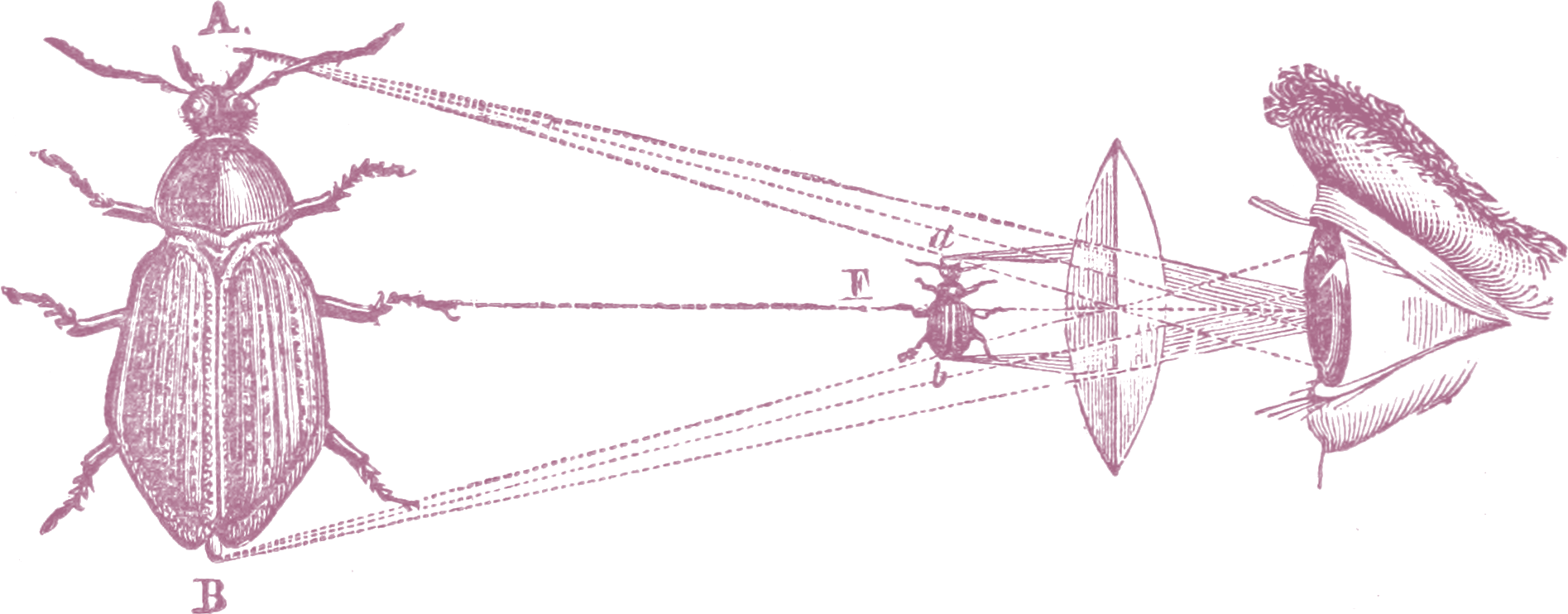

"Cartoon depiction of the methodology scientists deploy when they investigate neurocognitive phenomena or capacities." (Guest & Martin, 2025, figure 1)

Abstract: In order to understand cognition, we often recruit analogies as building blocks of theories to aid us in this quest. One such attempt, originating in folklore and alchemy, is the homunculus: a miniature human who resides in the skull and performs cognition. Perhaps surprisingly, this appears indistinguishable from the implicit proposal of many neurocognitive theories, including that of the 'cognitive map,' which proposes a representational substrate for episodic memories and navigational capacities. In such 'small cakes' cases, neurocognitive representations are assumed to be meaningful and about the world, though it is wholly unclear who is reading them, how they are interpreted, and how they come to mean what they do. We analyze the 'small cakes' problem in neurocognitive theories (including, but not limited to, the cognitive map) and find that such an approach a) causes infinite regress in the explanatory chain, requiring a human-in-the-loop to resolve, and b) results in a computationally inert account of representation, providing neither a function nor a mechanism. We caution against a 'small cakes' theoretical practice across computational cognitive modelling, neuroscience, and artificial intelligence, wherein the scientist inserts their (or other humans') cognition into models because otherwise the models neither perform as advertised, nor mean what they are purported to, without said 'cake insertion.' We argue that the solution is to tease apart explanandum and explanans for a given scientific investigation, with an eye towards avoiding van Rooij's (formal) or Ryle's (informal) infinite regresses.

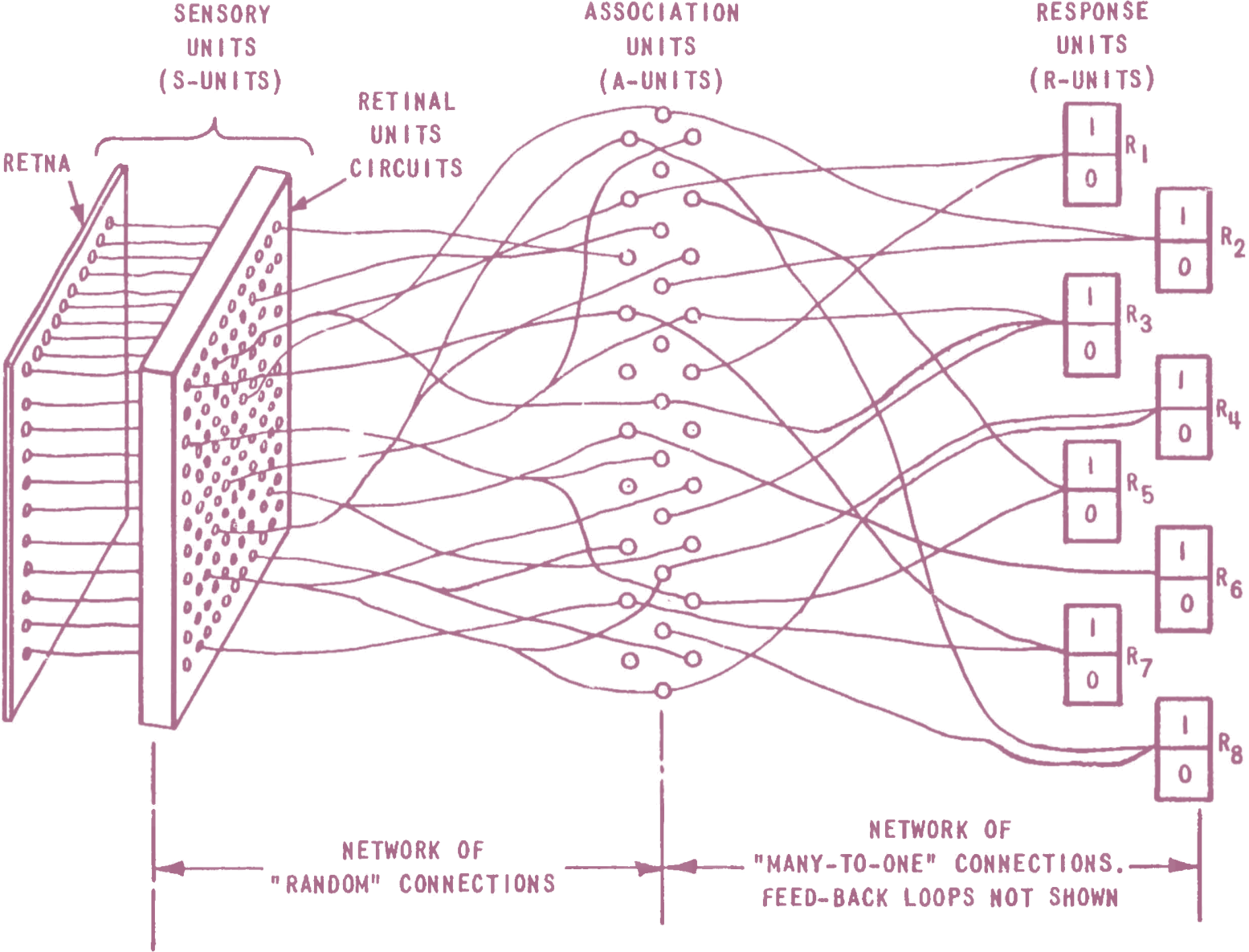

A Metatheory of Classical and Modern Connectionism

- Guest, O. & Martin, A. E. (2025). A Metatheory of Classical and Modern Connectionism. Psychological Review. https://doi.org/10.1037/rev0000591

Abstract: Contemporary AI models owe much of their success and discontents to connectionism, a framework in cognitive science that has been (and continues to be) highly influential. Herein, we analyze artificial neural networks (ANNs): a) when used as scientific instruments of study; and b) when functioning as emergent arbiters of the zeitgeist in the cognitive, computational, and neural sciences. Building on our previous work with respect to analogizing between ANNs and cognition, brains, or behaviour (Guest & Martin, 2023), we use metatheoretical analysis techniques (Guest, 2024), including formal logic, to characterise two distinct tendencies within connectionism that we dub classical and modern, with divergent properties, e.g. goals, mechanisms, scientific questions. We also demonstrate how we, as a field, often fail to follow important lines of argument to their end — this results in a paradoxical praxis. By engaging more deeply with (meta)theory surrounding ANNs, our field can obviate the cycle of AI winters and summers, which need not be inevitable.

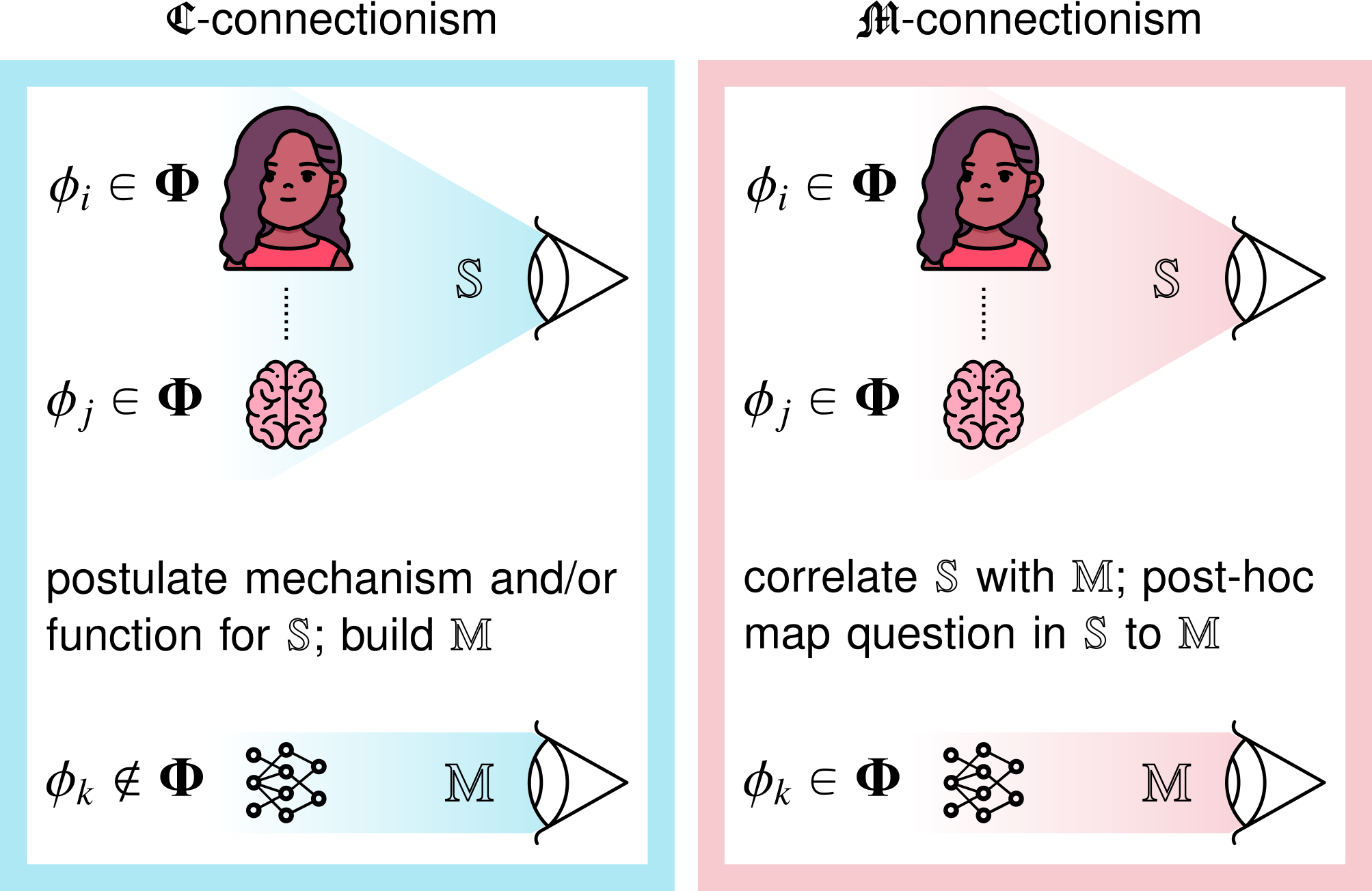

"A cartoon depiction of the simplified differences between C- and M-connectionism with respect to postulating mechanisms and building models (collectively M), and relating them to the cognitive and neural systems (collectively S)." (Guest & Martin, 2025, figure 2)

Publications

(selected)

- Guest, O., Suarez, M., & van Rooij, I. (2025). Towards Critical Artificial Intelligence Literacies. Zenodo. https://doi.org/10.5281/zenodo.17786243

- Guest, O. & Martin, A. E. (2025). A Metatheory of Classical and Modern Connectionism. Psychological Review. https://doi.org/10.1037/rev0000591

- Guest, O. & van Rooij, I. (2025). Critical Artificial Intelligence Literacy for Psychologists. PsyArXiv. https://doi.org/10.31234/osf.io/dkrgj_v1

- Guest, O., Suarez, M., Müller, B., et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. https://doi.org/10.5281/zenodo.17065099

- Guest, O. (2025). What Does 'Human-Centred AI' Mean?. arXiv. https://doi.org/10.48550/arXiv.2507.19960

- van Rooij, I. & Guest, O. (2025). Combining Psychology with Artificial Intelligence: What could possibly go wrong?. PsyArXiv. https://doi.org/10.31234/osf.io/aue4m_v1

- Guest, O., Scharfenberg, N., & van Rooij, I. (2025). Modern Alchemy: Neurocognitive Reverse Engineering. PhilSci-Archive. https://philsci-archive.pitt.edu/25289

- Forbes, S. H. & Guest, O. (2025). To Improve Literacy, Improve Equality in Education, Not Large Language Models. Cognitive Science. https://doi.org/10.1111/cogs.70058

- Guest, O. & Martin, A. E. (2025). Are Neurocognitive Representations 'Small Cakes'?. PhilSci-Archive. https://philsci-archive.pitt.edu/24834

- van Rooij, I., Guest, O., Adolfi, F. G., de Haan, R., Kolokolova, A., & Rich, P. (2024). Reclaiming AI as a theoretical tool for cognitive science. Computational Brain & Behavior. https://doi.org/10.1007/s42113-024-00217-5

- Guest, O. & Forbes, S. H. (2024). Teaching coding inclusively: if this, then what?. Tijdschrift voor Genderstudies. https://doi.org/10.5117/TVGN2024.2-3.007.GUES

- van der Gun, L. & Guest, O. (2024). Artificial Intelligence: Panacea or Non-intentional Dehumanisation?. Journal of Human-Technology Relations. https://doi.org/10.59490/jhtr.2024.2.7272

- Guest, O. (2024). What Makes a Good Theory, and How Do We Make a Theory Good?. Computational Brain & Behavior. https://doi.org/10.1007/s42113-023-00193-2

- Guest, O. & Martin, A. E. (2023). On Logical Inference over Brains, Behaviour, and Artificial Neural Networks. Computational Brain & Behavior. https://doi.org/10.1007/s42113-022-00166-x

- Erscoi, L., Kleinherenbrink, A., & Guest, O. (2023). Pygmalion Displacement: When Humanising AI Dehumanises Women. SocArXiv. https://doi.org/10.31235/osf.io/jqxb6

- Birhane, A. & Guest, O. (2021). Towards Decolonising Computational Sciences. Women, Gender & Research. https://doi.org/10.7146/kkf.v29i2.124899

- Guest, O. & Martin, A. E. (2021). How Computational Modeling Can Force Theory Building in Psychological Science. Perspectives on Psychological Science. https://doi.org/10.1177/1745691620970585

- Guest, O., Caso, A., & Cooper, R. P. (2020). On Simulating Neural Damage in Connectionist Networks. Computational Brain & Behavior. https://doi.org/10.1007/s42113-020-00081-z

- Cooper, R. P. & Guest, O. (2014). Implementations are not specifications: specification, replication and experimentation in computational cognitive modeling. Cognitive Systems Research. https://doi.org/10.1016/j.cogsys.2013.05.001